The Law of Conservation of Testing Effort:

- Like energy, the total testing burden for a piece of software is constant. It can be neither increased nor reduced, only transformed.

- However, the cost to your business (in terms of time, money and trust) of these different forms is variable.

Here’s an example.

Let’s say I’ve been tasked with adding a new screen to my company’s mobile app. Let’s also say I’m not in the mood for following TDD right now. I just want to see the new screen in the app as soon as possible.

So I go ahead and write the feature without any tests.

Since I’m still somewhat of a responsible developer, I realize I need to test the scenarios for the new screen before shipping it. It’s just that now I have to test them manually.

Luckily, everything works the first time. Nice. I just cleverly avoided spending an extra hour of tedious development time writing tests.

Or did I?

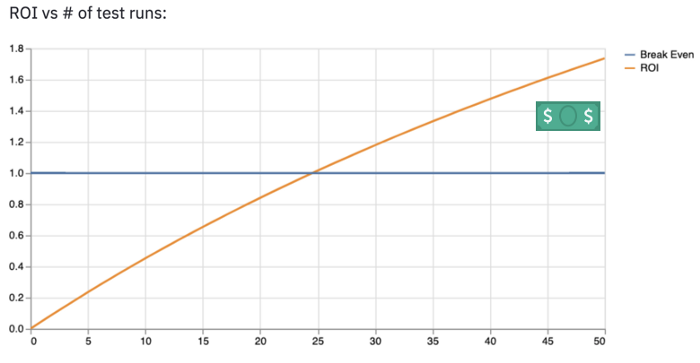

Notice that I didn’t actually dodge any testing responsibilities. I simply exchanged up-front, deterministic, slow-to-write, fast-to-run tests for after-the-fact, non-deterministic, free-to-write, slow-to-run tests. This seems like a good deal if I only need to run the tests once.

In reality, software always needs changing. Software that doesn’t change is obsolete, or soon will be, almost by definition. So changes to my new screen will inevitably be needed. And with every change, the tests need to be run again, and the prospect of doing them manually becomes less and less attractive. This shifts the cost-benefit equation in favour of the up-front, automated tests.
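To put rough numbers on that shift, here is a minimal sketch in Python. The one hour of test writing comes from the example above. Everything else is an assumption chosen purely for illustration: 15 minutes per manual pass, 30 seconds per automated run, and the hypothetical names `Strategy` and `break_even_runs`.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """Cost profile of one way of carrying the same testing burden."""
    name: str
    write_minutes: float  # one-off cost of creating the tests
    run_minutes: float    # developer time consumed by each test run

    def total_minutes(self, runs: int) -> float:
        """Cumulative developer time after `runs` test runs."""
        return self.write_minutes + self.run_minutes * runs


def break_even_runs(automated: Strategy, manual: Strategy) -> int:
    """Smallest number of runs at which `automated` costs no more than `manual`."""
    extra_upfront = automated.write_minutes - manual.write_minutes
    saved_per_run = manual.run_minutes - automated.run_minutes
    if extra_upfront <= 0:
        return 0  # automation is already no more expensive before any run
    if saved_per_run <= 0:
        raise ValueError("automation never pays off with these numbers")
    return math.ceil(extra_upfront / saved_per_run)


# Illustrative figures only (assumptions, not measurements).
automated = Strategy("automated", write_minutes=60, run_minutes=0.5)
manual = Strategy("manual", write_minutes=0, run_minutes=15)

if __name__ == "__main__":
    print("break-even after", break_even_runs(automated, manual), "runs")
    for runs in (1, 5, 20):
        print(runs, manual.total_minutes(runs), automated.total_minutes(runs))
```

Under these assumed figures, manual testing is cheaper for the first four runs and automated testing is cheaper from the fifth run onward. The exact crossover depends entirely on your own numbers, but the shape is the same: the more often the screen changes, the more the up-front cost is amortized.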

Now, on to a more extreme example.

Let’s say I’m a less responsible yet even more confident developer (not a good combo). After coding the new screen, I just give it a quick smoke test. The screen shows up with the expected content. Nice. Ship it. I go and get a coffee, satisfied with my speed and skill as a developer, having cleverly dodged both automated testing and manual testing.

What about the burden of testing the edge cases for the new feature? Did it evaporate? No, it was merely shifted onto the users, unbeknownst to them. The testing effort remains constant, but the cost has changed. The immediate, measurable cost of developer time is exchanged for a delayed, less measurable cost to the reputation of your product and company: lost trust as users discover bugs that could easily have been found before shipping. It’s not hard to see how this will ultimately affect your company’s bottom line.

Now, am I making the case that you should work to predict all possible errors for all conceivable scenarios before shipping anything? Not at all. Due to the ultimately unpredictable complexity of a real production environment with real users, there is a class of errors which you can only find out about by shipping. Some of the testing burden forever rests on your users.

Also, too much time spent trying to predict errors comes with its own costs. As Charity Majors points out in her article “I test in prod”:

> 80 percent of the bugs are caught with 20 percent of the effort, and after that you get sharply diminishing returns.

Failing to ship fast enough deprives your users of valuable-but-imperfect software and deprives your team of valuable learning. This learning is absolutely crucial in adapting the product quickly enough for the business to survive.

The implication of the Law of Conservation of Testing Effort is not that all testing should be done before production. It’s that attempting to dodge the kinds of testing more appropriate to pre-production merely shifts the burden into post-prod, where it costs more.

> The phrase “I don’t always test, but when I do, I test in production” seems to insinuate that you can only do one or the other: test before production or test in production. But that’s a false dichotomy. All responsible teams perform both kinds of tests.

[Emphasis mine]